Edge computing is reshaping digital twin security by enabling faster, localized threat detection. Instead of relying on cloud systems, edge computing processes data near its source, reducing delays and improving response times. This approach is critical as digital twins - virtual models of physical assets - become increasingly integral across industries like manufacturing, healthcare, and infrastructure.

Key Takeaways:

- Why it matters: Digital twins rely heavily on IoT data, but transmitting this data to the cloud introduces latency and security risks.

- How edge computing solves this: By processing data locally, edge systems detect threats in milliseconds, minimize bandwidth use, and reduce vulnerabilities.

- Real-world results: In a test reported in August 2025, an edge-based intrusion detection framework identified cyberattacks in under 500 ms with a 96.3% F1-score.

- Scalability: Edge computing supports large-scale systems by distributing processing across multiple nodes, ensuring efficiency and reliability.

Quick Overview:

- Latency: Sub-500 ms detection times.

- Security: Local processing reduces risks during data transmission.

- Industries: Used in manufacturing, healthcare, transportation, and more.

- Future trends: AI and 6G networks will further enhance edge computing capabilities.

Edge computing is not just a technical upgrade - it’s a necessity for secure, efficient digital twin operations in today’s data-intensive world.


Benefits of Edge Computing for Intrusion Detection

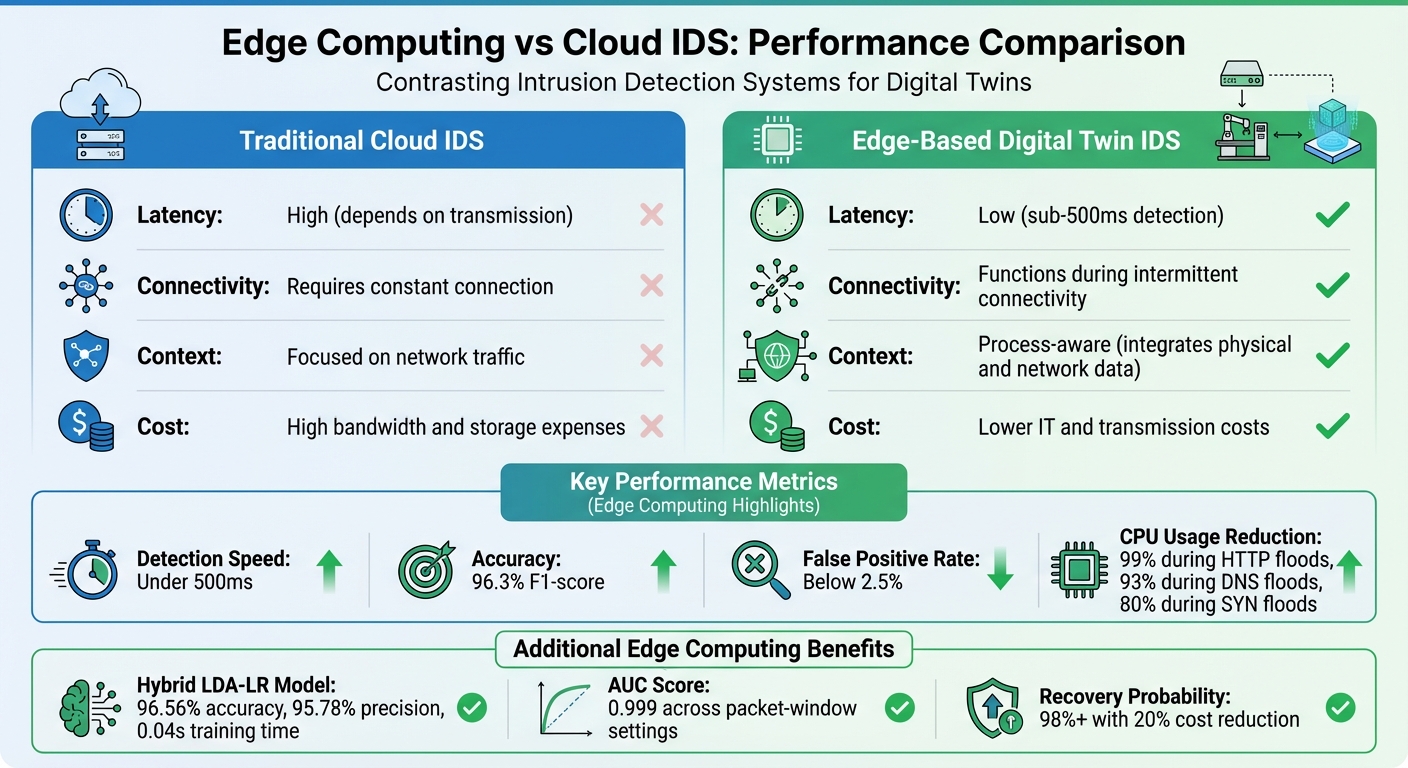

Edge Computing vs Cloud IDS Performance Comparison for Digital Twins

Edge computing processes data right where it’s generated, speeding up intrusion detection and response times. By shifting from centralized cloud analysis to local processing, it delivers measurable gains in detection speed, accuracy, and overall system reliability.

Real-Time Threat Detection

Handling security data locally removes the delays often seen in cloud-based systems. For instance, in August 2025, a Digital Twin-driven Intrusion Detection framework tested in a simulated water treatment plant successfully identified false data injection, denial-of-service, and command injection attacks in under 500 milliseconds. It achieved an impressive 96.3% F1-score with false positive rates below 2.5%.
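For readers less familiar with the metrics quoted above, here is a minimal sketch of how an F1-score and a false positive rate are derived from raw detection counts. The function name and the counts are illustrative assumptions, not figures from the study.

```python
def detection_metrics(tp, fp, tn, fn):
    """Derive common IDS metrics from confusion-matrix counts.

    tp: attacks correctly flagged      fp: benign events wrongly flagged
    tn: benign events correctly passed fn: attacks that were missed
    """
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    # F1 is the harmonic mean of precision and recall.
    if precision + recall:
        f1 = 2 * precision * recall / (precision + recall)
    else:
        f1 = 0.0
    # False positive rate: share of benign events that raised an alarm.
    fpr = fp / (fp + tn) if (fp + tn) else 0.0
    return {"precision": precision, "recall": recall, "f1": f1, "fpr": fpr}


# Made-up counts for illustration only.
metrics = detection_metrics(tp=90, fp=10, tn=890, fn=10)
print(metrics)
```

A detector can score well on F1 and still be noisy in practice, which is why the false positive rate is usually reported alongside it.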

"Edge computing makes real-time computing possible in locations where it would not normally be feasible and reduces bottlenecking on the networks and datacenters that support edge devices." - Microsoft Azure

Edge nodes tackle intensive anomaly detection tasks on-site, sending only the most relevant alerts to central systems. For example, edge AI-enabled security cameras process video footage locally, transmitting only critical clips. This approach not only reduces network congestion but also ensures robust security coverage without sacrificing efficiency.
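The "analyze locally, forward only alerts" pattern can be sketched roughly as follows. This is a simple rolling z-score filter written for illustration. The class name, window size, and threshold are assumptions, and a production system would likely use a more capable detector.

```python
from collections import deque
from statistics import mean, pstdev


class EdgeAlertFilter:
    """Keep a rolling baseline on the edge node and emit only outliers."""

    def __init__(self, window=50, z_threshold=3.0, min_samples=10):
        self.history = deque(maxlen=window)  # recent normal readings
        self.z_threshold = z_threshold
        self.min_samples = min_samples

    def observe(self, value):
        """Return an alert dict for an anomalous reading, otherwise None."""
        alert = None
        if len(self.history) >= self.min_samples:
            mu = mean(self.history)
            sigma = pstdev(self.history)
            if sigma > 0:
                z = (value - mu) / sigma
                if abs(z) > self.z_threshold:
                    alert = {"value": value, "z_score": round(z, 2)}
        if alert is None:
            # Only readings judged normal update the baseline.
            self.history.append(value)
        return alert


# Hypothetical sensor stream: steady readings with one injected spike.
readings = [10.0, 10.2, 9.9, 10.1] * 5 + [25.0, 10.0]
edge_filter = EdgeAlertFilter()
alerts = []
for reading in readings:
    result = edge_filter.observe(reading)
    if result is not None:
        alerts.append(result)  # this is all that would leave the node
print(len(readings), "readings ->", len(alerts), "alert(s) forwarded")
```

Only the contents of `alerts` would cross the network here, which is where the bandwidth saving comes from.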

These advancements in real-time detection pave the way for quicker responses in environments where every second counts.

Lower Latency and Faster Response Times

Edge computing doesn’t just detect threats quickly - it also enables faster responses. This speed is critical in industries where even a slight delay can lead to serious vulnerabilities. Unlike cloud-based systems, which often introduce delays, edge computing ensures near-instant detection, making it ideal for time-sensitive industrial settings.

The performance edge is particularly noticeable in dynamic environments where threats constantly evolve. For example, a lightweight anomaly detection system deployed on edge nodes achieved an AUC of 0.999 across various packet-window settings. Even more striking, edge-based detection reduced average CPU usage by 99% during HTTP floods, 93% during DNS floods, and 80% during SYN floods compared to systems relying solely on centralized detection.

In another example, researchers in April 2021 developed an online anomaly detection system for a LiBr absorption chiller used in large building HVAC systems. By using edge computing, the system deployed algorithms near the physical equipment, combining historical and real-time data to monitor health and detect potential failures early.

Better Scalability for Large Systems

Edge computing isn’t just about speed - it’s also about handling scale. Industrial operations generate enormous amounts of data, which can overwhelm centralized systems. By distributing processing across multiple edge nodes, organizations can effectively monitor complex networks with thousands of connected devices. For instance, a hybrid LDA-LR model for edge security demonstrated 96.56% accuracy and 95.78% precision, with training times as short as 0.04 seconds - a level of performance that supports deployment across large-scale industrial systems.

| Feature | Traditional Cloud IDS | Edge-Based Digital Twin IDS |
|---|---|---|
| Latency | High (depends on transmission) | Low (sub-500ms) |
| Connectivity | Requires constant connection | Functions during intermittent connectivity |
| Context | Focused on network traffic | Process-aware (integrates physical and network data) |
| Cost | High bandwidth and storage expenses | Lower IT and transmission costs |

"Edge computing helps to mitigate [security] risk by allowing enterprises to process data locally and store it offline. This decreases the data transmitted over the network and helps enterprises be less vulnerable to security threats." - Microsoft Azure

Edge computing also shines in remote or isolated environments, such as oil rigs, ships, or mining sites. These locations often face unreliable or intermittent internet connectivity. By processing data locally and sending only essential insights when a connection is available, edge computing ensures uninterrupted security monitoring and protection, no matter the circumstances.
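One way to picture the "send essential insights when a connection is available" behavior is a store-and-forward buffer. The sketch below is an assumption about how such a buffer might look. The `send` and `link_up` callables are hypothetical stand-ins for whatever uplink the site actually has.

```python
from collections import deque


class StoreAndForwardBuffer:
    """Queue alerts locally and deliver them in order once the uplink returns."""

    def __init__(self, capacity=1000):
        # With maxlen set, the oldest alert is dropped when the buffer is full.
        self.pending = deque(maxlen=capacity)

    def record(self, alert):
        self.pending.append(alert)

    def flush(self, send, link_up):
        """Try to deliver queued alerts; return how many were sent."""
        sent = 0
        while self.pending and link_up():
            if not send(self.pending[0]):
                break  # delivery failed, keep the alert for the next attempt
            self.pending.popleft()
            sent += 1
        return sent


# Hypothetical usage: the link is down while alerts accumulate, then returns.
buffer = StoreAndForwardBuffer(capacity=3)
for name in ["a", "b", "c", "d"]:
    buffer.record(name)

delivered = []
print(buffer.flush(send=lambda a: True, link_up=lambda: False))  # offline
print(buffer.flush(send=lambda a: delivered.append(a) or True,
                   link_up=lambda: True))
print(delivered)
```

Dropping the oldest entries when the buffer fills is one design choice among several. A site that cannot afford to lose alerts might spill to disk instead.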

System Architectures and Detection Algorithms

To build secure digital twins, a four-layer model is recommended: edge, fog, cloud, and monitoring. Each layer has a specific role - edge handles real-time IoT sensor data, fog focuses on aggregating data and managing twin migration, cloud provides long-term storage and deep analysis, and monitoring oversees intrusion detection and resource management. This structure creates a strong foundation for deploying effective real-time detection algorithms.

Edge Computing Architectures for Digital Twins

Security at the edge is crucial. One way to enhance it is by isolating compromised devices or data streams without disrupting nearby nodes. Lightweight containerization tools, like Docker, are particularly useful here. They allow digital twin instances to be packaged and migrated efficiently during breaches or node failures.

A standout feature of edge-based intrusion detection is its independence from specific IoT protocols. By focusing on TCP/IP traffic instead of device-specific protocols like ZigBee or LoRa, a single intrusion detection system can protect diverse industrial networks. This eliminates the need for multiple security solutions tailored to different communication technologies. Additionally, this approach aligns with ISO 23247, a standard for manufacturing digital twin architectures. The standard increasingly incorporates edge-level capabilities like local data capture and preprocessing.

Algorithms for Real-Time Detection

Edge nodes play a dual role in detecting threats. They use unsupervised clustering methods (e.g., k-means, DBSCAN) to spot unusual traffic patterns and pair these with supervised classifiers (e.g., KNN, Random Forest, Logistic Regression) to categorize traffic as benign or malicious. Among these, k-means has shown stronger performance in Industrial IoT traffic analysis, achieving a silhouette score of 0.612, compared to DBSCAN's 0.473.
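To make the clustering step and the silhouette score more concrete, here is a small dependency-free sketch of k-means plus a silhouette calculation. It is an illustration under assumptions, not the setup behind the scores quoted above. The two traffic features and the sample points are invented, and a real deployment would more likely rely on an established library.

```python
import math
import random


def kmeans(points, k, iterations=20, seed=0):
    """Plain k-means: returns (labels, centroids) for a list of tuples."""
    rng = random.Random(seed)
    centroids = rng.sample(points, k)
    labels = [0] * len(points)
    for _ in range(iterations):
        # Assignment step: each point joins its nearest centroid.
        labels = [min(range(k), key=lambda c: math.dist(p, centroids[c]))
                  for p in points]
        # Update step: each centroid moves to the mean of its members.
        for c in range(k):
            members = [p for p, lab in zip(points, labels) if lab == c]
            if members:
                centroids[c] = tuple(sum(x) / len(members)
                                     for x in zip(*members))
    return labels, centroids


def silhouette_score(points, labels):
    """Mean silhouette: near 1 means tight, well-separated clusters."""
    clusters = {}
    for p, lab in zip(points, labels):
        clusters.setdefault(lab, []).append(p)
    if len(clusters) < 2:
        raise ValueError("silhouette needs at least two clusters")
    scores = []
    for p, lab in zip(points, labels):
        own = clusters[lab]
        if len(own) == 1:
            scores.append(0.0)
            continue
        # a: mean distance to the other members of the same cluster.
        a = sum(math.dist(p, q) for q in own) / (len(own) - 1)
        # b: mean distance to the nearest other cluster.
        b = min(sum(math.dist(p, q) for q in other) / len(other)
                for other_lab, other in clusters.items() if other_lab != lab)
        denom = max(a, b)
        scores.append((b - a) / denom if denom else 0.0)
    return sum(scores) / len(scores)


# Invented, already-scaled features: (packet rate, mean packet size).
normal = [(1.0, 1.0), (1.2, 0.9), (0.9, 1.1), (1.1, 1.0)]
flood = [(8.0, 8.0), (8.2, 7.9), (7.9, 8.1), (8.1, 8.0)]
traffic = normal + flood
labels, centroids = kmeans(traffic, k=2)
print(labels, round(silhouette_score(traffic, labels), 3))
```

In a two-stage design like the one described, the cluster assignments would then feed a supervised classifier that decides whether a flagged group is benign or malicious.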

Hybrid models bring even greater precision and adaptability. For instance, combining LDA with Logistic Regression has delivered 96.56% accuracy and 95.78% precision, with training times as short as 0.04 seconds. This speed makes it highly effective for addressing emerging threats. To further enhance system resilience, optimization algorithms like Hybrid Genetic-PSO have proven valuable. They achieve recovery probabilities above 98% while cutting migration costs by 20%.


Implementation Strategies and Best Practices

Key Deployment Considerations

Having reliable detection algorithms is just the beginning - how you implement them can make or break their effectiveness.

Start with hardware that supports the demands of edge processing. Many successful setups use compact boards such as a Raspberry Pi as edge nodes or industrial gateways. These devices can run virtualization hypervisors and handle machine learning inference while fitting into industrial environments.

On the software side, proper architecture is crucial. Deploy intrusion detection systems within isolated virtual machines to minimize the trusted computing base. Tools like NixOS can simplify this process by offering reproducible builds and secure, declarative configurations, making maintenance across multiple edge locations much easier.

Network design also plays a big role. Bandwidth constraints should guide the configuration of edge nodes. For instance, analyzing only the first 60 bytes of IP packets can optimize performance on resource-limited hardware. However, keep in mind that edge nodes are vulnerable to risks like unauthorized remote access or physical tampering.
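As a rough illustration of header-only inspection, the sketch below reads features from just the first 60 bytes of a raw IPv4 packet. It assumes the buffer starts at the IP header (no Ethernet framing), and the function names and feature set are hypothetical.

```python
import ipaddress
import struct

SNAP_LEN = 60  # bytes inspected per packet, per the figure mentioned above


def header_features(packet):
    """Extract a few features from the first SNAP_LEN bytes of an IPv4 packet."""
    head = packet[:SNAP_LEN]
    if len(head) < 20:
        raise ValueError("truncated IPv4 header")
    (ver_ihl, _tos, total_len, _ident, _frag, ttl, proto, _csum,
     src, dst) = struct.unpack("!BBHHHBBH4s4s", head[:20])
    if ver_ihl >> 4 != 4:
        raise ValueError("not an IPv4 packet")
    ihl = (ver_ihl & 0x0F) * 4  # header length in bytes
    features = {
        "src": str(ipaddress.IPv4Address(src)),
        "dst": str(ipaddress.IPv4Address(dst)),
        "protocol": proto,
        "ttl": ttl,
        "total_length": total_len,
    }
    # For TCP (6) and UDP (17), ports are the first 4 transport-header bytes.
    if proto in (6, 17) and len(head) >= ihl + 4:
        src_port, dst_port = struct.unpack("!HH", head[ihl:ihl + 4])
        features["src_port"] = src_port
        features["dst_port"] = dst_port
    return features


def sample_packet():
    """Build a synthetic TCP/IPv4 packet using documentation-range addresses."""
    ip_header = struct.pack("!BBHHHBBH4s4s", 0x45, 0, 40, 1, 0, 64, 6, 0,
                            bytes([192, 0, 2, 1]), bytes([198, 51, 100, 7]))
    tcp_start = struct.pack("!HH", 44321, 80)
    return ip_header + tcp_start + b"\x00" * 1000  # payload is never parsed


print(header_features(sample_packet()))
```

Because the payload beyond the snapshot is never touched, per-packet cost stays roughly constant regardless of packet size, which suits resource-limited hardware.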

Adding Edge Computing to Existing Digital Twins

Incorporating edge computing into digital twins doesn’t have to be overwhelming. Start small by offloading data preprocessing tasks - like cleansing, feature extraction, normalization, and dimensionality reduction - to edge nodes. This approach allows you to keep leveraging your existing cloud-based analytics while adding real-time processing capabilities at the edge.
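A first offloading step might look something like the sketch below: summarize a window of raw samples into a few features and scale values to a fixed range before anything is sent upstream. The function names and the sensor range are assumptions chosen for illustration.

```python
from statistics import mean, pstdev


def window_features(samples):
    """Summarize a window of raw sensor samples into a compact feature dict."""
    return {
        "mean": mean(samples),
        "std": pstdev(samples),
        "min": min(samples),
        "max": max(samples),
    }


def min_max_normalize(values, lo, hi):
    """Scale values into [0, 1] using a known sensor range, clamping outliers."""
    span = hi - lo
    if span <= 0:
        raise ValueError("hi must be greater than lo")
    return [min(1.0, max(0.0, (v - lo) / span)) for v in values]


# Hypothetical temperature window from a sensor rated for 0-100 degrees.
raw = [21.5, 21.7, 21.6, 22.0, 21.9]
print(window_features(raw))
print(min_max_normalize(raw, lo=0.0, hi=100.0))
```

Sending the four summary values instead of every raw sample is the kind of reduction that lets existing cloud analytics keep running while the edge takes over the real-time work.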

Anomaly-based detection is particularly useful for identifying zero-day attacks that traditional rule-based systems might miss. Since signature-based tools can increase memory usage by up to 140% over baseline, edge nodes can act as first-stage filters, sending only the most suspicious traffic to more resource-intensive analysis tools.

For a faster response to threats, integrate detection modules with automated systems. For example, you can configure iptables to automatically block IP addresses flagged as anomalous. This kind of setup has been shown to cut average CPU usage by nearly 99% during HTTP flooding attacks.
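A minimal sketch of the automated blocking step is shown below. It validates the flagged address and builds an `iptables` rule, and it defaults to a dry run so nothing is changed unless explicitly requested. The function names are hypothetical, and actually applying the rule assumes a Linux host and root privileges.

```python
import ipaddress
import subprocess


def build_block_command(ip):
    """Return the firewall command that drops inbound traffic from ip."""
    # ip_address() raises ValueError on malformed input, which also keeps
    # arbitrary strings out of the command line.
    addr = ipaddress.ip_address(ip)
    tool = "iptables" if addr.version == 4 else "ip6tables"
    return [tool, "-I", "INPUT", "-s", str(addr), "-j", "DROP"]


def block_ip(ip, dry_run=True):
    """Build the rule and, unless dry_run is set, apply it."""
    command = build_block_command(ip)
    if not dry_run:
        # Passing a list (no shell) avoids command injection.
        subprocess.run(command, check=True)
    return command


# Dry run with a documentation-range address: prints the rule only.
print(" ".join(block_ip("203.0.113.9")))
```

In practice a setup like this usually also needs an allowlist and rule expiry, so that a false positive does not lock out a legitimate controller indefinitely.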

Once edge computing is in place, protecting the processed data becomes a top priority.

Data Security and Privacy Measures

Securing both the data and the devices is essential to fully realize the benefits of edge-based intrusion detection.

Adopting a Zero Trust Architecture is a good starting point. Every access request - whether at the device, edge, or cloud level - should be authenticated and encrypted to prevent lateral movement by attackers. This approach helps mitigate risks from both external threats and insider attacks.
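The "authenticate every request" idea can be sketched with message authentication codes. The example below signs each message with a per-device key and rejects anything tampered with or stale. It is an illustration under assumptions: the function names and message fields are invented, key distribution is out of scope, and encryption in transit (for example TLS) would sit alongside this rather than be replaced by it.

```python
import hashlib
import hmac
import json
import time


def sign_message(key, payload, now=None):
    """Attach a timestamp and an HMAC-SHA256 tag to an outgoing message."""
    body = dict(payload, ts=int(time.time() if now is None else now))
    raw = json.dumps(body, sort_keys=True).encode()
    tag = hmac.new(key, raw, hashlib.sha256).hexdigest()
    return {"body": body, "tag": tag}


def verify_message(key, message, max_age_s=30, now=None):
    """Accept a message only if its tag matches and it is recent enough."""
    raw = json.dumps(message["body"], sort_keys=True).encode()
    expected = hmac.new(key, raw, hashlib.sha256).hexdigest()
    # compare_digest avoids leaking information through timing differences.
    if not hmac.compare_digest(expected, message["tag"]):
        return False
    current = time.time() if now is None else now
    return abs(current - message["body"]["ts"]) <= max_age_s


# Hypothetical per-device key and alert message.
device_key = b"example-key-not-for-production"
msg = sign_message(device_key, {"node": "edge-1", "alert": "dos"})
print(verify_message(device_key, msg))
```

The timestamp check limits replay of captured messages. A stricter design would also track nonces or sequence numbers per device.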

Another key step is to anonymize sensitive data during preprocessing. For example, removing MAC and IP addresses from packets during analysis can help maintain compliance with data protection standards while still preserving detection accuracy. By keeping raw sensitive data local, you significantly reduce the risk of breaches.
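As a sketch of this preprocessing step, the function below strips address fields from a packet record before it leaves the node. The optional keyed-hash flow token is an added assumption rather than something the approach above prescribes. It lets records from the same flow be correlated without exposing the addresses themselves. The field names are hypothetical.

```python
import hashlib
import hmac

SENSITIVE_FIELDS = ("src_mac", "dst_mac", "src_ip", "dst_ip")


def anonymize(record, flow_key=None):
    """Return a copy of record without MAC and IP address fields.

    If flow_key (bytes) is given, add a short keyed-hash token so records
    from the same address pair can still be grouped downstream.
    """
    cleaned = {k: v for k, v in record.items() if k not in SENSITIVE_FIELDS}
    if flow_key is not None:
        ident = "|".join(str(record.get(f, "")) for f in SENSITIVE_FIELDS)
        digest = hmac.new(flow_key, ident.encode(), hashlib.sha256).hexdigest()
        cleaned["flow_token"] = digest[:16]
    return cleaned


# Hypothetical packet record with documentation-range addresses.
packet = {
    "src_mac": "02:00:00:00:00:01", "dst_mac": "02:00:00:00:00:02",
    "src_ip": "192.0.2.10", "dst_ip": "198.51.100.20",
    "length": 60, "protocol": 6,
}
print(anonymize(packet))
print(anonymize(packet, flow_key=b"local-secret"))
```

The key for the token stays on the edge node, so the cloud side sees stable pseudonyms but cannot reverse them to addresses.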

Physical security cannot be overlooked. Edge nodes are often deployed in public or industrial areas, making them susceptible to tampering. Protect these devices from unauthorized physical access and monitor network parameters like signal strength and packet rate at the gateway level. Sudden changes in these metrics can indicate Sybil or jamming attacks. Combining physical hardening measures with strong authentication for edge-to-cloud and edge-to-device communications creates a multi-layered defense that safeguards industrial machinery from unauthorized control.

Future Trends and Developments

AI-Driven Edge Computing Advances

Artificial intelligence is reshaping edge threat detection through continuous learning models that adjust in real time to evolving attack patterns. This reduces the need for manual updates and helps systems keep pace with new threats.

In edge-IIoT digital twin environments, deep learning models have achieved impressive results, with 96% accuracy and a 0.94 precision rate for detecting DDoS attacks. AI-powered digital twin frameworks for 6G networks have shown an attack detection success rate of 98%. Beyond security, these systems deliver measurable operational gains - cutting latency by 12%, energy use by 15%, and RAM consumption by 20% compared to traditional static models.

"The synergy resulting from the combination of digital twins and edge-IIoT creates a very potent framework for improving operational efficiency, reducing downtime, and facilitating seamless integration." – Feras Al-Obeidat, Zayed University

Another exciting development is co-simulation, a technique that runs multiple security models simultaneously within a digital twin. This approach identifies interdependencies and flags deviations from normal behavior patterns. Digital Security Twins (DSTs) take this a step further by gathering data directly from live code, uncovering vulnerabilities in production environments before attackers can exploit them.

These advancements are paving the way for next-generation networks, where the emergence of 6G will redefine the landscape of connectivity and security.

6G and Next-Generation IoT Networks

The arrival of 6G networks is set to revolutionize how edge computing tackles security challenges. These networks promise ultra-low latency and massive connectivity, enabling real-time synchronization between physical assets and their digital twins. However, they also bring new complexities, including expanded attack surfaces and greater device diversity.

"The fact that 6G networks entail ultra-low latency with massive connectivity and edge-native intelligence accentuates attack surfaces with more distributions and heterogeneity." – Scientific Reports

Building on the benefits of low latency and localized processing, 6G networks introduce edge-native intelligence, where intrusion detection shifts from centralized systems to decentralized, autonomous security agents. For instance, a lightweight federated IDS framework for 6G IoT systems has shown energy savings of nearly 60% and a 70% reduction in communication overhead, all while maintaining detection accuracy above 93%. These systems also reduce false positives by up to 30% using spatio-temporal uncertainty-aware filtering.

At the data volumes 6G is expected to bring, centralized processing becomes impractical, and edge-based processing becomes indispensable. 6G Edge of Things (EoT) architectures integrate digital twin networks to create digital replicas of the edge layer itself, enabling proactive security monitoring and real-time threat mitigation.

Applications Across Industrial Sectors

These cutting-edge technologies are unlocking new opportunities for industries to enhance both operational efficiency and security. Each sector is finding unique ways to leverage these advancements:

- Manufacturing and smart factories: Real-time quality monitoring and predictive maintenance are now possible, with edge computing handling massive datasets locally to safeguard sensitive operational information.

- Transportation systems: Autonomous vehicles, railway signaling, and port equipment rely on 6G-enabled edge computing for low-latency services, where security failures could have severe consequences.

- Critical infrastructure and construction: Digital twins are used for seismic bridge monitoring, underground inspections, and seawall condition tracking. Advanced fault tolerance mechanisms in 6G edge setups achieve recovery probabilities exceeding 98%, ensuring systems remain functional even during hardware failures.

- Agriculture and environmental monitoring: Edge-based digital twins help with crop disease detection and yield forecasting, while robust security measures protect proprietary farming data.

- Healthcare systems: Networked digital twins enable real-time patient monitoring, with high-availability edge frameworks ensuring medical data stays synchronized and secure.

A shared priority across these sectors is the need for immediate processing and response. By keeping sensitive data local, edge computing minimizes the attack surface, avoiding the risks associated with transmitting raw data to centralized clouds. These implementations not only enhance security but also deliver cost savings and performance boosts across diverse applications. This technological evolution strengthens digital twin security while driving operational improvements across industries.

Conclusion

Summary of Edge Computing Benefits

Edge computing is transforming how digital twins detect intrusions by moving data processing closer to the source. This approach enables real-time threat detection, minimizing delays caused by cloud transmissions. It also optimizes bandwidth by ensuring only the most relevant security alerts are sent across the network, while local data processing adds an extra layer of privacy protection. Organizations leveraging edge computing have seen maintenance costs decrease by 20% to 30% thanks to predictive capabilities, with advanced frameworks delivering energy savings and reducing communication overhead.

By combining digital twins with edge computing, businesses can stay ahead of potential threats and address them proactively.

Implementation Recommendations

To fully unlock the potential of edge computing, organizations should consider the following steps:

- Begin processing sensor data at the edge for immediate security monitoring.

- Use a layered architecture where edge nodes handle time-sensitive tasks, employing lightweight algorithms like Random Forest or KNN to achieve high accuracy without overwhelming hardware resources.

For existing systems, ensure real-time synchronization between physical assets and their digital twins to maintain accurate threat detection. Explore federated learning methods to enable edge nodes to share global threat intelligence without transferring raw data. Additionally, implement differential privacy techniques to protect individual data points during model updates.
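The federated learning and differential privacy recommendations can be sketched together: each node clips and perturbs its model update locally, and a coordinator averages the updates without ever seeing raw data. This is a simplified illustration. The function names are hypothetical, and the noise level shown is not calibrated to any formal privacy budget.

```python
import math
import random


def clip(update, max_norm):
    """Scale an update vector down so its L2 norm is at most max_norm."""
    norm = math.sqrt(sum(x * x for x in update))
    scale = min(1.0, max_norm / norm) if norm > 0 else 1.0
    return [x * scale for x in update]


def private_update(update, max_norm=1.0, noise_std=0.1, rng=None):
    """Clip an update, then add Gaussian noise before it leaves the node."""
    rng = rng or random.Random()
    return [x + rng.gauss(0.0, noise_std) for x in clip(update, max_norm)]


def federated_average(updates, sample_counts):
    """Average node updates, weighting each by its local sample count."""
    total = sum(sample_counts)
    dim = len(updates[0])
    return [sum(u[i] * n for u, n in zip(updates, sample_counts)) / total
            for i in range(dim)]


# Hypothetical updates from three edge nodes with different data volumes.
rng = random.Random(42)
local_updates = [[0.4, -0.2], [0.5, -0.1], [0.3, -0.3]]
shared = [private_update(u, rng=rng) for u in local_updates]
global_update = federated_average(shared, sample_counts=[100, 300, 50])
print(global_update)
```

Clipping bounds how much any single node can influence the global model, and the added noise makes it harder to infer individual data points from an update. Choosing the noise scale for a specific privacy guarantee is a separate exercise.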

The Path Forward

As technology advances, emerging 6G networks and edge-native intelligence are set to further boost these capabilities. Self-evolving AI models will adapt to counter new threats automatically, while energy-efficient systems will balance security needs with power limitations.

"Litmus Edge simplifies the data representation and utilization process. Its digital twin technology allows users to troubleshoot equipment in real-time and manage data from multiple streams, catering to specific use cases and organizational needs." – Vishvesh Shah, Litmus

Industries like manufacturing and healthcare are already proving that edge-powered digital twins enhance security while delivering measurable operational improvements. Platforms such as Anvil Labs are leading the charge, helping organizations secure critical infrastructure as next-generation networks become a reality.

FAQs

How does edge computing enhance the security of digital twins?

Edge computing plays a key role in boosting the security of digital twins by handling data right at the network's edge - closer to where it originates. This setup not only cuts down on latency but also enables real-time threat detection and anomaly tracking. By ensuring the digital twin stays in constant sync with its physical counterpart, edge computing supports on-the-spot threat management, reducing the chances of sensitive data being exposed to external systems.

Another advantage of localized data processing is that raw data doesn’t need to be sent to the cloud, which significantly lowers risks tied to data transmission and storage. This makes edge computing an essential tool for safeguarding digital twin environments, especially in industries where maintaining data integrity and speed is critical.

What are the benefits of using edge computing for intrusion detection in digital twins?

Edge computing brings some standout benefits when it comes to intrusion detection in digital twin systems. By handling data processing right where it's generated, it minimizes latency, allowing for real-time threat detection and response - a crucial factor for maintaining system security. Plus, this localized approach cuts down on bandwidth usage since there's no constant need to send data back and forth to a centralized cloud.

Another advantage is its ability to enhance machine learning performance. Edge computing enables faster model updates and deals with threats directly at the source, ensuring more precise detection while reducing the chance of disruptions across the entire system. These features make edge computing a smart choice for securing digital twin environments efficiently and effectively.

How do AI and 6G networks improve edge computing for digital twins?

Artificial intelligence (AI) is transforming edge computing for digital twins by enabling advanced analytics to operate directly on edge devices - right where sensors and equipment are located. This includes powerful tools like machine learning-based anomaly detection, predictive maintenance, and adaptive threat response. By processing data locally, these systems achieve lightning-fast response times, with latency often reduced to sub-second levels. Plus, it cuts down on bandwidth usage and supports real-time decision-making. For instance, AI can identify and block cyberattacks, such as DDoS attacks, instantly, all while keeping operations running smoothly.

The arrival of 6G networks is set to elevate this even further. With targeted latencies under 1 millisecond, much higher data rates, and enhanced reliability, 6G is expected to let edge servers sync digital twins with their real-world counterparts almost instantly. This means simulations can be more accurate, and systems can react more quickly to emerging threats. Together, AI and 6G create an environment for digital twin systems that is both secure and highly efficient.

For platforms like Anvil Labs, these advancements translate into quicker intrusion detection, sharper analytics, and seamless device integration.