Edge AI is transforming drone navigation by enabling drones to process data directly onboard without relying on cloud servers. This reduces delays, improves reliability in areas with poor connectivity, and enhances data security. By using hardware like NVIDIA Jetson and Qualcomm Snapdragon, drones can make real-time decisions, avoid obstacles, and optimize flight paths.

Key Takeaways:

- Faster Response Times: Local processing cuts latency to milliseconds.

- Improved Efficiency: Adaptive edge-assisted navigation extended flight range by up to 27.28% in one study.

- Reliable Offline Operation: Crucial for remote areas with limited internet.

- Enhanced Data Security: Keeps sensitive data on the device.

Edge AI systems combine onboard processors, external edge servers, and smart task schedulers to balance performance and energy use. Technologies like depth sensors, YOLO algorithms, and path planning further refine navigation, making drones more effective for industrial tasks like site inspections and surveillance.

This shift to onboard intelligence is reshaping how drones operate in dynamic environments, offering faster, smarter, and more efficient solutions for industrial applications.


Core Components of Edge AI Systems for Drones

Edge AI navigation systems for drones operate through three tightly connected layers: onboard processing modules, edge servers, and intelligent schedulers. Each layer plays a specific role, working together to ensure drones remain responsive while conserving power. This collaboration is what enables the real-time decision-making required for autonomous flight.

Onboard Navigation Modules

Drones are equipped with lightweight processors, such as the GAP8 or STM32 microcontrollers, to handle real-time flight operations. These compact chips, weighing just a few grams, perform essential tasks like reading IMU sensors, stabilizing the drone with PID controllers, and running reactive planning algorithms. For instance, a nano-drone weighing only 30 grams successfully avoided obstacles by processing planning algorithms directly onboard while outsourcing object detection to an edge server. The onboard module interprets metadata from the edge - like bounding box coordinates - and converts it into immediate flight adjustments, fine-tuning velocity and yaw rates in mere milliseconds.
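The stabilization loops these microcontrollers run are typically built on PID control. The sketch below is a generic textbook discrete PID controller with illustrative gains. It is not taken from any GAP8 or STM32 flight stack, and the toy plant in `simulate_hold` is a hypothetical stand-in for real drone dynamics.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class PID:
    """Textbook discrete PID controller; gains used below are illustrative only."""
    kp: float
    ki: float
    kd: float
    integral: float = 0.0
    prev_error: Optional[float] = None

    def update(self, setpoint: float, measurement: float, dt: float) -> float:
        error = setpoint - measurement
        self.integral += error * dt
        # No previous error on the first sample, so the derivative term starts at zero.
        derivative = 0.0 if self.prev_error is None else (error - self.prev_error) / dt
        self.prev_error = error
        return self.kp * error + self.ki * self.integral + self.kd * derivative


def simulate_hold(target: float, steps: int = 200, dt: float = 0.01) -> float:
    """Drive a hypothetical single-integrator plant (state' = command) toward target."""
    pid = PID(kp=5.0, ki=0.0, kd=0.0)
    state = 0.0
    for _ in range(steps):
        state += pid.update(target, state, dt) * dt
    return state
```

With `ki` and `kd` set to zero the controller reduces to proportional control, which is enough for the toy plant. Real attitude loops tune all three gains against the airframe.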

Edge Servers for Environmental Mapping

More demanding computational tasks, such as creating depth maps or occupancy grids, are managed by external edge servers. These servers use GPU-accelerated models like SSD MobileNet V2 to handle object detection, producing detailed environmental data that would overwhelm the drone's onboard hardware. In December 2023, Carnegie Mellon University's Living Edge Lab showcased the SteelEagle platform at the Mill 19 testing ground. Here, ground-based edge servers handled all computer vision and obstacle avoidance tasks, enabling micro-drones under 250 grams to autonomously track and follow targets. As Jim Blakley, Associate Director, explained:

"In this context, compute equals weight and weight is bad. But, autonomous flight requires substantial intelligence to execute an on-going observe, orient, decide, act (OODA) loop".

Task Offloading and Scheduling

Neural schedulers, powered by deep reinforcement learning, constantly manage the balance between onboard processing and offloading. These systems optimize factors like network latency, battery usage, and task priority. The A3D framework, introduced in July 2023, dynamically determines where tasks should be executed and adjusts image compression ratios based on real-time conditions. For example, the system can transmit JPEG-encoded images to edge servers in about 120 milliseconds - much faster than RAW formats - while the scheduler ensures power consumption is balanced with navigation precision.
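A3D learns its placement and compression decisions with deep reinforcement learning. As a much simpler illustration of the trade-off it navigates, the sketch below compares a hand-tuned weighted cost (latency plus an energy penalty) for running a task onboard versus offloading a RAW or JPEG frame. This is a heuristic, not A3D's method. The 79 KB and 6 KB frame sizes come from this article; the link speeds, compute times, energy figures, and the `ms_per_joule` weight are hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, Tuple


@dataclass
class Link:
    bandwidth_mbps: float  # uplink throughput to the edge server
    rtt_ms: float          # round-trip network latency


def transmit_ms(payload_kb: float, link: Link) -> float:
    """Serialization time for the payload plus one round trip (1 KB = 1024 bytes)."""
    bits = payload_kb * 1024 * 8
    return bits / (link.bandwidth_mbps * 1e6) * 1000 + link.rtt_ms


def plan_task(local_ms: float, local_j: float, remote_ms: float,
              payloads_kb: Dict[str, float], link: Link,
              tx_j_per_kb: float, ms_per_joule: float = 100.0) -> Tuple[str, Dict[str, float]]:
    """Pick the placement with the lowest weighted cost (latency + energy penalty).

    ms_per_joule converts energy into an equivalent latency penalty (a tuning knob).
    """
    costs = {"onboard": local_ms + ms_per_joule * local_j}
    for encoding, size_kb in payloads_kb.items():
        latency = transmit_ms(size_kb, link) + remote_ms
        energy = size_kb * tx_j_per_kb
        costs[f"edge:{encoding}"] = latency + ms_per_joule * energy
    best = min(costs, key=costs.get)
    return best, costs
```

With a 250 ms onboard detector, a 20 Mbps link, and 10 ms round trips, offloading the JPEG frame wins. Drop the link to 0.1 Mbps with 300 ms round trips and the same task stays onboard.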

Technologies for Real-Time Optimization

Once tasks are distributed, making decisions in mere milliseconds becomes essential. These technologies enhance drones' capabilities, building on onboard and edge computing strategies to enable real-time navigation. Three key technologies drive this process: depth sensors for environmental mapping, object detection algorithms for identifying obstacles, and path planning algorithms for charting safe routes. Let’s dive into how these tools refine perception, detection, and decision-making.

Environmental Perception with Depth Sensors

Depth sensors, like LiDAR, provide drones with detailed 3D mapping data. By processing this data locally with edge AI, drones can react in under 10 milliseconds, a significant improvement over the roughly 100 milliseconds needed when relying on cloud servers. This rapid response is crucial for avoiding collisions, especially at high speeds.
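The difference between 10 and 100 milliseconds is easiest to see as distance flown before the drone can begin to react. The sketch below assumes a hypothetical cruise speed of 10 m/s and ignores braking distance, which comes on top of this figure.

```python
def distance_before_reaction_m(speed_m_s: float, latency_ms: float) -> float:
    """Distance flown between sensing an obstacle and the first control response."""
    return speed_m_s * latency_ms / 1000.0


# Hypothetical 10 m/s cruise speed; latencies are the figures quoted above.
for label, latency in (("edge", 10.0), ("cloud", 100.0)):
    print(f"{label}: {distance_before_reaction_m(10.0, latency):.2f} m")
```

At that speed, local processing leaves about 0.1 m of "blind" travel, while a cloud round trip leaves about 1 m.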

To maintain precise positioning, even in challenging environments like warehouses or underground mines, drones combine depth sensor data with inputs from GPS and IMU systems using sensor fusion algorithms such as Kalman Filters and Bayesian Networks. This allows drones to adjust their routes instantly, ensuring smooth navigation.
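A Kalman filter blends a motion prediction with a noisy absolute measurement, weighting each by its uncertainty. The sketch below is a minimal single-axis version. It assumes IMU-derived velocity drives the prediction and a GPS or depth-based position fix drives the correction. Production systems use multi-state (often extended) filters, and the noise values here are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class ScalarKalman:
    """One-axis Kalman filter: IMU-derived velocity drives the prediction,
    an absolute position fix (e.g. GPS or a depth-based range) corrects it."""
    x: float  # position estimate (m)
    p: float  # variance of the estimate (m^2)
    q: float  # process noise added per prediction step (m^2)
    r: float  # measurement noise variance (m^2)

    def predict(self, velocity: float, dt: float) -> None:
        self.x += velocity * dt
        self.p += self.q  # uncertainty grows while dead-reckoning

    def update(self, z: float) -> None:
        k = self.p / (self.p + self.r)  # Kalman gain: trust in measurement vs. prediction
        self.x += k * (z - self.x)
        self.p *= 1.0 - k               # uncertainty shrinks after a measurement
```

When the measurement is noisy (large `r`), the gain is small and the filter leans on the IMU prediction. When drift accumulates (large `p`), it leans on the fix.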

Object Detection Algorithms

Object detection on the edge leverages tools like YOLO and MobileNet to identify obstacles and evaluate collision risks. In December 2025, a research team led by Kline introduced an edge-native wildlife monitoring system using YOLOv11m and YOLO-Behavior. The system achieved an inference latency of 23.8 milliseconds on GPU hardware, meeting the 33-millisecond threshold needed for 30fps video streams. During seven missions, it detected signs of animal vigilance 51 seconds before the animals fled, potentially reducing disruptive behavior by 93% compared to manual piloting.

For smaller drones, such as nano-drones, SSD-MobileNet V2 has been run in split computing setups, reaching 8 frames per second and supporting navigation speeds of 1 meter per second.
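The real-time figures above reduce to simple frame-budget arithmetic: at 30 fps each frame must be processed in about 33 ms, and at 8 fps and 1 m/s the drone moves 12.5 cm between detections. The helper names below are illustrative.

```python
def frame_budget_ms(fps: float) -> float:
    """Time available per frame if every frame must be processed."""
    return 1000.0 / fps


def keeps_up(latency_ms: float, fps: float) -> bool:
    """True if inference finishes within one frame period."""
    return latency_ms <= frame_budget_ms(fps)


def travel_per_frame_m(speed_m_s: float, fps: float) -> float:
    """How far the drone moves between consecutive detections."""
    return speed_m_s / fps


print(f"30 fps budget: {frame_budget_ms(30):.1f} ms")        # ~33.3 ms
print("23.8 ms at 30 fps:", keeps_up(23.8, 30))              # within budget
print("23.8 ms at 60 fps:", keeps_up(23.8, 60))              # exceeds ~16.7 ms
print(f"8 fps at 1 m/s: {travel_per_frame_m(1.0, 8):.3f} m")  # 0.125 m
```

The same arithmetic explains why slower detectors cap flight speed: halving the frame rate doubles the distance covered between looks at the scene.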

Path Planning Algorithms

Path planning algorithms convert detection data into actionable flight commands, ensuring drones remain responsive. Reactive planning uses visual cues to compute "repulsive actions", adjusting velocity and yaw rate in real time.
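A reactive "repulsive action" can be as simple as slowing down and turning away from a detected box. The sketch below is a hypothetical rule, not the planner from any study cited here. It uses normalized bounding-box coordinates, treats box area as a crude proxy for proximity, and follows the convention that positive yaw rate means turning left. The gains and `stop_area` threshold are illustrative.

```python
from typing import Tuple

Box = Tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max), normalized to [0, 1]


def repulsive_action(box: Box, v_max: float = 1.0, yaw_gain: float = 1.5,
                     stop_area: float = 0.4) -> Tuple[float, float]:
    """Map one obstacle bounding box to (forward velocity m/s, yaw rate rad/s).

    A bigger box is assumed to mean a closer obstacle. Positive yaw rate turns left.
    """
    x_min, y_min, x_max, y_max = box
    area = max(0.0, x_max - x_min) * max(0.0, y_max - y_min)
    strength = min(1.0, area / stop_area)   # 0 = far away, 1 = at the stop threshold
    forward = v_max * (1.0 - strength)      # slow down as the obstacle looms
    center_x = (x_min + x_max) / 2.0
    away = 1.0 if center_x >= 0.5 else -1.0  # obstacle right of center -> turn left
    return forward, yaw_gain * away * strength
```

A small box on the right gives a gentle left turn at nearly full speed. A box filling the frame stops forward motion and commands the maximum yaw rate.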

In April 2025, Rutgers University researchers, led by Khizar Anjum, introduced an MDP-based framework that dynamically adjusted algorithm parameters based on environmental factors like clutter and lighting. Using a Parrot Bebop drone, the team achieved real-time performance of 30 frames per second at 480x270 resolution, delivering a 42% processing speed boost while maintaining 80% detection accuracy. As Anjum explained:

"The core of our approach is an intelligent decision system that dynamically selects algorithm parameters to achieve 'sufficient' accuracy - delivering acceptable detection performance while significantly reducing computational overhead and energy usage".
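To make the "sufficient accuracy" idea concrete, here is a toy MDP solved with value iteration. Its states are clutter levels, its actions are detector settings, and its reward pays for meeting an accuracy target while always charging for energy. This is not the Rutgers framework: the states, accuracies, energy costs, and transition probabilities are all hypothetical. Only the 0.8 target echoes the 80% accuracy figure above.

```python
from typing import Dict, Tuple

STATES = ("low_clutter", "high_clutter")
ACTIONS = ("fast", "accurate")  # e.g. low- vs. high-resolution detector settings

# Hypothetical detection accuracy per (state, action) and energy cost per action.
ACCURACY = {
    ("low_clutter", "fast"): 0.85,
    ("low_clutter", "accurate"): 0.92,
    ("high_clutter", "fast"): 0.65,
    ("high_clutter", "accurate"): 0.83,
}
ENERGY = {"fast": 0.2, "accurate": 0.6}

# Hypothetical scene dynamics: how clutter changes between decision steps.
TRANSITION = {
    "low_clutter": {"low_clutter": 0.8, "high_clutter": 0.2},
    "high_clutter": {"low_clutter": 0.3, "high_clutter": 0.7},
}


def reward(state: str, action: str, target: float = 0.8) -> float:
    """Reward 'sufficient' accuracy, penalize falling short, always charge for energy."""
    bonus = 1.0 if ACCURACY[(state, action)] >= target else -1.0
    return bonus - ENERGY[action]


def value_iteration(gamma: float = 0.9,
                    tol: float = 1e-9) -> Tuple[Dict[str, float], Dict[str, str]]:
    v = {s: 0.0 for s in STATES}
    while True:
        q = {
            (s, a): reward(s, a) + gamma * sum(p * v[s2] for s2, p in TRANSITION[s].items())
            for s in STATES
            for a in ACTIONS
        }
        new_v = {s: max(q[(s, a)] for a in ACTIONS) for s in STATES}
        if max(abs(new_v[s] - v[s]) for s in STATES) < tol:
            policy = {s: max(ACTIONS, key=lambda a: q[(s, a)]) for s in STATES}
            return new_v, policy
        v = new_v
```

Because these transitions do not depend on the action, the resulting policy simply picks the best immediate trade-off: the fast setting in low clutter and the accurate one in high clutter. The MDP formulation pays off once actions influence future states, for example through battery level.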

Meanwhile, Princeton University researchers developed MonoNav, enabling a lightweight Crazyflie MAV (37 grams) to navigate cluttered environments at 0.5 meters per second. By using monocular depth estimation to reconstruct 3D scenes and motion primitives for collision-free paths, MonoNav reduced collision rates by a factor of four compared to end-to-end learning methods.
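Motion-primitive planning of this kind can be sketched in a few lines: simulate a handful of candidate maneuvers, discard any that pass too close to an obstacle, and pick the safe one that ends nearest the goal. This is a simplified 2D illustration, not MonoNav's code. It uses point obstacles in place of a reconstructed 3D map, a unicycle motion model, and hypothetical speeds, yaw rates, and safety radius.

```python
import math
from typing import List, Optional, Sequence, Tuple

Point = Tuple[float, float]


def rollout(yaw_rate: float, speed: float = 0.5, horizon_s: float = 2.0,
            dt: float = 0.1) -> List[Point]:
    """Forward-simulate a constant-speed, constant-yaw-rate primitive (unicycle model)."""
    x = y = heading = 0.0
    path = []
    for _ in range(int(round(horizon_s / dt))):
        heading += yaw_rate * dt
        x += speed * math.cos(heading) * dt
        y += speed * math.sin(heading) * dt
        path.append((x, y))
    return path


def choose_primitive(obstacles: Sequence[Point], goal: Point,
                     yaw_rates: Sequence[float] = (-0.8, -0.4, 0.0, 0.4, 0.8),
                     safety_radius: float = 0.3) -> Optional[float]:
    """Return the yaw rate of the collision-free primitive ending closest to the goal,
    or None if every primitive violates the safety radius (caller should stop/hover)."""
    best, best_cost = None, math.inf
    for w in yaw_rates:
        path = rollout(w)
        clearance = min((math.dist(p, o) for p in path for o in obstacles), default=math.inf)
        if clearance < safety_radius:
            continue  # this maneuver would come too close to an obstacle
        cost = math.dist(path[-1], goal)
        if cost < best_cost:
            best, best_cost = w, cost
    return best
```

With a clear path the planner flies straight at the goal. With an obstacle directly ahead it selects a turning primitive. If nothing is safe, it returns `None` so the caller can hover.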


Benefits of Edge AI for Drone Navigation

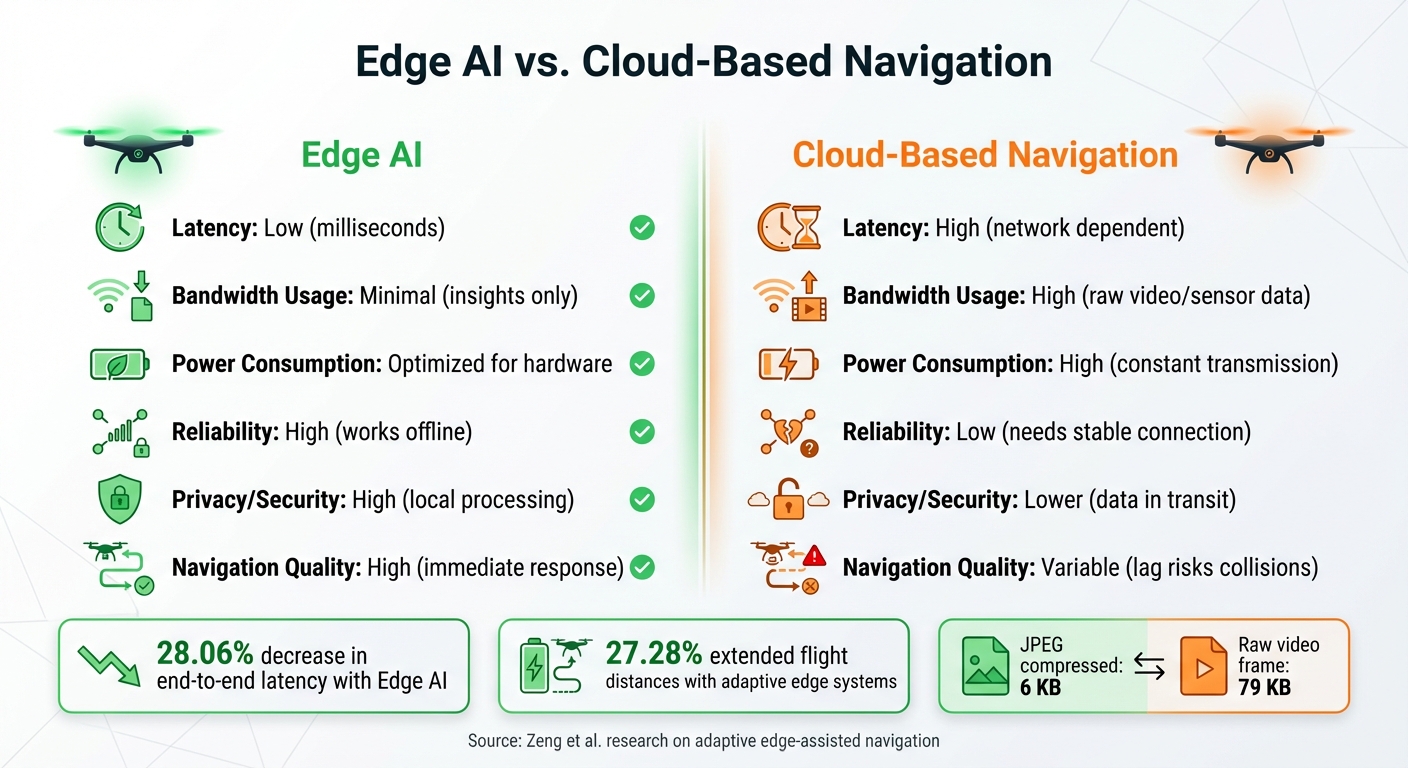

Edge AI vs Cloud-Based Drone Navigation Performance Comparison

Edge AI vs. Cloud-Based Navigation

Processing data locally on drones offers faster response times and uses fewer resources compared to relying on remote servers. This difference is especially critical in environments where split-second decisions are necessary - think construction sites with heavy machinery or warehouses with ever-changing layouts.

| Metric | Edge AI | Cloud-Based Navigation |
|---|---|---|
| Latency | Low (milliseconds) | High (network dependent) |
| Bandwidth Usage | Minimal (insights only) | High (raw video/sensor data) |
| Power Consumption | Optimized for hardware | High (constant transmission) |
| Reliability | High (works offline) | Low (needs stable connection) |
| Privacy/Security | High (local processing) | Lower (data in transit) |
| Navigation Quality | High (immediate response) | Variable (lag risks collisions) |

For example, research by Liekang Zeng and colleagues found that adaptive edge-assisted navigation decreased end-to-end latency by 28.06% and extended flight distances by up to 27.28% compared to non-adaptive systems. By cutting transmission and processing delays, drones can react much faster to their surroundings.

Even the way data is handled makes a difference. A raw video frame might take up about 79 KB, but JPEG compression shrinks it to just 6 KB. This kind of compression significantly cuts bandwidth use and the battery drain of transmitting data, a major gain for both performance and efficiency.
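Scaled up to a video stream, the per-frame savings translate directly into the uplink throughput a drone must sustain. The sketch below uses the 79 KB and 6 KB frame sizes quoted above. The 30 fps camera rate and the 1 KB = 1024 bytes convention are assumptions.

```python
def stream_mbps(frame_kb: float, fps: float) -> float:
    """Uplink throughput needed to send every frame (1 KB = 1024 bytes)."""
    return frame_kb * 1024 * 8 * fps / 1e6


RAW_KB, JPEG_KB = 79.0, 6.0  # per-frame sizes quoted above
FPS = 30                     # hypothetical camera rate

print(f"compression ratio: {RAW_KB / JPEG_KB:.1f}x")   # ~13.2x
print(f"raw:  {stream_mbps(RAW_KB, FPS):.1f} Mbit/s")  # ~19.4 Mbit/s
print(f"jpeg: {stream_mbps(JPEG_KB, FPS):.2f} Mbit/s") # ~1.47 Mbit/s
```

Roughly 19 Mbit/s of sustained uplink is demanding over a cellular or long-range radio link. About 1.5 Mbit/s is far easier to hold.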

Performance Improvements for Industrial Applications

Industrial settings often demand reliability that cloud-based systems can't consistently deliver. A great example comes from May 2025, when researchers Emre Girgin, Arda Taha Candan, and Coşkun Anıl Zaman showcased an edge AI-powered drone surveillance system designed for autonomous multi-robot operations on construction sites. Their solution combined a lightweight object detection model on a custom UAV platform with a 5G-enabled coordination system. This setup excelled at real-time obstacle detection and dynamic path planning, effectively meeting the challenges of a busy construction environment.

Another impressive case is Carnegie Mellon University's SteelEagle platform. This system enabled a 320-gram, off-the-shelf drone costing under $800 to autonomously track targets and avoid obstacles. By connecting to a ground-based cloudlet through a mobile network, the drone achieved high levels of performance without relying entirely on onboard processing.

For drones with limited resources, optimization techniques are essential. Advanced edge AI methods can shrink model sizes by up to 14.4x while improving energy use per inference by 78% and boosting overall energy efficiency by 5.6x. These improvements not only extend flight times but also reduce operating costs - vital considerations for industries where every extra minute in the air and every dollar saved can make a big difference.
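Quantization is one of the most common of these optimization techniques. The sketch below shows symmetric per-tensor int8 quantization in plain Python. Storing int8 instead of float32 alone makes weights 4x smaller; larger reductions such as the 14.4x cited above require combining it with other techniques, such as pruning. The function names are illustrative and not any framework's API.

```python
from typing import List, Tuple


def quantize_int8(weights: List[float]) -> Tuple[List[int], float]:
    """Symmetric per-tensor int8 quantization: w ≈ q * scale with q in [-127, 127]."""
    max_abs = max((abs(w) for w in weights), default=0.0)
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q: List[int], scale: float) -> List[float]:
    """Recover approximate float weights from int8 codes."""
    return [v * scale for v in q]
```

Each weight's rounding error is at most `scale / 2`. That is why per-channel scales or quantization-aware training are used when a single scale would be too coarse for a layer.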

Implementation Strategies for Industrial Applications

Integrating Advanced Sensors

Choosing the right sensors is a cornerstone of edge AI in industrial settings. For example, LiDAR excels at spatial mapping, thermal cameras detect heat signatures, and RGB imagery can be stitched into orthomosaics that produce highly accurate site maps.

Sensor fusion plays a critical role in achieving reliable results. Back in October 2024, researchers successfully implemented the AIVIO System for inspecting power poles. This system combined an IMU and an RGB camera with a deep learning–based object pose estimator, optimized specifically for companion board deployment. The result? Closed-loop, object-relative navigation - showcasing how intelligent processing can make up for a limited sensor suite.

In areas where GPS signals are unavailable, the Bavovna.AI hybrid INS kit steps in. It integrates data from accelerometers, gyroscopes, compasses, barometers, and airflow sensors to deliver an impressive 99.99% accuracy rate. This sensor data is then processed directly by edge hardware, converting raw inputs into actionable insights.

Edge Processing Hardware

The choice of edge processing hardware can make or break an industrial AI deployment. Platforms like NVIDIA Jetson and Qualcomm Snapdragon are widely regarded as benchmarks in the field. According to NVIDIA researchers Chitoku Yato and Khalil BenKhaled:

"The robotics workflow has shifted from traditional programming to end-to-end imitation learning using foundation models like NVIDIA Isaac GR00T N1".

Here’s a quick breakdown of hardware tiers and their capabilities:

| Hardware Tier | Memory | Ideal For |
|---|---|---|
| Jetson Orin Nano Super | 8 GB | Fast object detection; lightweight models like Llama 3.2 3B |
| Jetson AGX Orin | 64 GB | Complex vision-language models; multi-sensor fusion |
| Jetson AGX Thor | 128 GB | High-end performance; 100B+ parameter models |

For most industrial tasks, the Jetson Orin Nano Super is sufficient for real-time object detection with algorithms like YOLO or MobileNet. However, if the application demands processing multiple data streams or running more complex models, stepping up to the AGX Orin makes sense. Balancing computational power with weight and battery life is crucial, particularly for applications like drones, where every gram impacts flight time.
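A quick way to match a model to one of these tiers is to estimate its weight memory from parameter count and numeric precision. The sketch below counts weights only. It assumes a hypothetical 80% of module memory is usable, since activations, caches, and the OS share the same memory pool on Jetson modules. Treat the result as a lower bound, not a sizing guarantee.

```python
from typing import Optional

BYTES_PER_PARAM = {"fp16": 2.0, "int8": 1.0, "int4": 0.5}
# Memory figures from the table above (decimal GB).
TIERS_GB = {"Jetson Orin Nano Super": 8, "Jetson AGX Orin": 64, "Jetson AGX Thor": 128}


def weights_gb(params_billion: float, precision: str) -> float:
    """Weight storage only; activations, caches, and the OS need extra room."""
    return params_billion * BYTES_PER_PARAM[precision]


def smallest_tier(params_billion: float, precision: str,
                  usable_fraction: float = 0.8) -> Optional[str]:
    """Smallest tier whose usable memory holds the weights, or None if none does."""
    need = weights_gb(params_billion, precision)
    for name, mem in sorted(TIERS_GB.items(), key=lambda kv: kv[1]):
        if need <= mem * usable_fraction:
            return name
    return None
```

Under these assumptions, a 3B-parameter model in fp16 (about 6 GB) fits the Orin Nano Super. A 100B-parameter model needs int8 or lower precision to fit on AGX Thor.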

To optimize performance, tools like TensorFlow Lite and ONNX Runtime help shrink models and speed up inference with little loss of accuracy. With such optimizations, transformer-based policies can run with latencies under 30 milliseconds, while the lower-level control loops they feed operate at frequencies above 400 Hz. Once processed, these data streams are ready for management and analysis, simplifying industrial workflows.
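A 30 ms inference is much longer than the 2.5 ms period of a 400 Hz loop. A common way to combine the two is a multi-rate design: the fast controller runs every tick and reuses the latest policy output, while a new policy result arrives every few ticks. The sketch below only counts how often each layer runs under that assumption. The structure is illustrative, not a specific framework's scheduler.

```python
import math
from typing import Tuple


def policy_stride(control_hz: float, policy_latency_ms: float) -> int:
    """Number of control ticks that elapse while one policy inference runs."""
    period_ms = 1000.0 / control_hz
    return math.ceil(policy_latency_ms / period_ms)


def count_updates(control_hz: int, policy_latency_ms: float,
                  duration_s: float) -> Tuple[int, int]:
    """Count control ticks and policy refreshes in a simple multi-rate loop."""
    stride = policy_stride(control_hz, policy_latency_ms)
    ticks = int(round(control_hz * duration_s))
    policy_updates = 0
    for tick in range(ticks):
        if tick % stride == 0:
            policy_updates += 1  # a fresh setpoint from the learned policy becomes available
        # the low-level controller (e.g. an attitude PID) would run here on every tick
    return ticks, policy_updates
```

With a 400 Hz controller and 30 ms inference, the policy refreshes every 12 ticks. That gives about 34 policy updates per second alongside 400 control updates.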

Using Anvil Labs for Data Management

After processing data in real time, managing it effectively is the next step to turning insights into actionable results. Anvil Labs offers a robust platform for handling the vast amounts of data generated by industrial operations, including 3D models, LiDAR point clouds, thermal images, and orthomosaics.

Anvil Labs bridges the gap between drone-captured data and actionable site management. It allows users to compare current drone footage against original design models to monitor construction progress, detect deviations, or flag safety concerns. With cross-device accessibility, site managers can review findings on tablets in the field, while engineers back in the office can dive into the finer details.

For teams conducting regular drone surveys, Anvil Labs simplifies workflows by integrating with AI tools for automated change detection. It can compare drone flights taken days or even months apart to identify changes on-site. Additionally, its secure sharing and access controls let project teams provide contractors, inspectors, or clients with the right level of access while keeping sensitive data protected.

With pricing at $99 per month for the Asset Viewer plan or $49 per project for Project Hosting, Anvil Labs offers an affordable way to transform raw drone data into organized, shareable insights. This ensures industrial projects stay on track and operate efficiently.

Conclusion

Edge AI is revolutionizing drone navigation in industrial settings by enabling drones to process data directly onboard using advanced hardware like NVIDIA Jetson and Qualcomm Snapdragon. This capability allows drones to detect obstacles and adjust their flight paths in milliseconds, even in areas with limited connectivity.

Studies highlight impressive advancements: edge-assisted frameworks reduce navigation latency by 28.06% and extend flight range by up to 27.28%. At the same time, deep learning techniques have compressed collision avoidance lookup tables from 5 GB to just 10 MB without compromising safety. These breakthroughs are already making a noticeable impact in real-world applications.

For example, the AIVIO system demonstrated in October 2024 and the edge AI surveillance system for construction sites presented in May 2025 show how these technologies are being put into practice.

To capitalize on these advancements, industrial operators should focus on integrating advanced sensors with powerful edge processing systems. Platforms like Anvil Labs are paving the way by offering secure data management for complex datasets, including 3D models, LiDAR scans, thermal imaging, and orthomosaics. These tools transform raw drone footage into actionable insights, enhancing operational efficiency.

The move toward edge AI equips drones to make smarter decisions in dynamic environments. As industrial operations become increasingly intricate, combining local processing power with tools like those from Anvil Labs will shape the future of autonomous drone technology.

FAQs

How does Edge AI enhance drone navigation in areas with limited connectivity?

Edge AI empowers drones to handle essential data processing right on the device itself, cutting out the need for continuous cloud communication. This capability ensures instant obstacle detection and flight path adjustments, even in remote locations with little to no network coverage.

With vision and decision-making models running locally, drones can adapt to changing conditions in real time. Instead of sending massive amounts of raw sensor data to the cloud, they transmit only the most critical insights when required. This approach minimizes delays and boosts overall efficiency.

How do onboard processors enable drones to make real-time decisions?

Onboard processors are essential for drone navigation, handling AI models and sensor-fusion algorithms directly on the device. By performing these computations locally, drones achieve low-latency responses, enabling them to perceive their environment, chart routes, and make autonomous decisions without depending on remote servers.

This real-time processing allows drones to react swiftly to changing conditions, like dodging obstacles or fine-tuning flight paths, which boosts both safety and operational efficiency.

How do edge servers improve drone navigation and performance?

Edge servers significantly boost drone performance by taking on compute-heavy tasks, like AI processing, using nearby high-powered hardware. This means drones don't have to rely entirely on their onboard systems, which helps cut down on latency, saves battery life, and frees up memory. The result? Drones can react faster to shifting conditions.

On top of that, edge servers allow for real-time adjustments to things like image resolution and data compression. This not only improves navigation accuracy but also helps extend the drone's flight range. With edge computing in play, drones are better equipped to make quick, informed decisions in challenging and unpredictable scenarios.